Most new bloggers are confused about how to use the custom robots.txt and custom robots header tags settings in Blogger. If you are unsure and do not know how to use these settings, the best option is not to use them, because the wrong SEO settings will make it difficult for your posts and pages to rank in search engines.

Leaving them off keeps the default Blogger settings in place, which in my opinion are optimal for getting your blog indexed. In this post, I have given a simple step-by-step tutorial, with images, for resetting these settings.

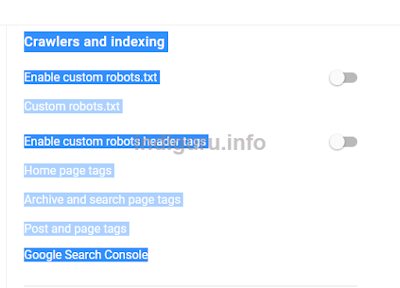

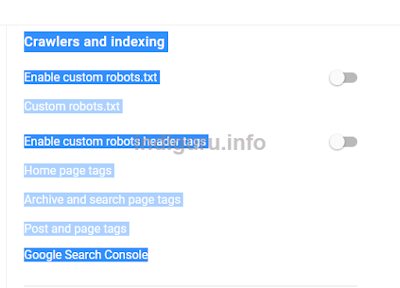

Go to Settings: Crawlers and indexing:

Enable custom robots.txt: Switch this off so that everything in the section is greyed out, as shown in the image, and save. If you have added any custom robots.txt in this section, remove it.

This will re-enable the default Blogger robots.txt.

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search
    Allow: /

    Sitemap: https://www.yoursite.com/sitemap.xml
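
If you want to check what these default rules allow and block, here is a minimal sketch using Python's standard `urllib.robotparser` module. The `is_allowed` helper and the sample post and label paths are made up for illustration, and `www.yoursite.com` is the placeholder domain from the rules above. Python's parser applies the first matching rule, so treat this as a rough check rather than an exact copy of how Google reads the file.

```python
from urllib.robotparser import RobotFileParser

# Default Blogger rules as quoted in this post (placeholder domain).
DEFAULT_RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://www.yoursite.com/sitemap.xml
"""

# Parse the rules from text instead of fetching them over the network.
parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())


def is_allowed(agent: str, url: str) -> bool:
    """Hypothetical helper: True if `agent` may crawl `url` under the rules."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    base = "https://www.yoursite.com"
    # Sample paths are assumptions, not taken from a real blog.
    checks = [
        ("Googlebot", "/2024/01/my-first-post.html"),
        ("Googlebot", "/search/label/SEO"),
        ("Mediapartners-Google", "/search/label/SEO"),
    ]
    for agent, path in checks:
        verdict = "allowed" if is_allowed(agent, base + path) else "blocked"
        print(f"{agent:22} {path:32} {verdict}")
```

With these rules, ordinary crawlers can reach posts and pages but not the `/search` pages (label and search result listings), while the AdSense crawler `Mediapartners-Google` is not restricted at all.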
